Mathematics for Machine Learning - Day 10

Linear Independence

The closure property guarantees that we end up with another vector in the same vector space. It's possible to find a set of vector which we can represent every vector space by adding them together and scaling them. This set of vectors is called a basis. (Honestly, I don't see when this paragraph is related to the topic, but I'll add it in just in case I'm too dumb to understand)

Linear combinations

Consider

Then:

With:

Dependence and Independence

If:

Then the result will always be true. This is the same as before, which in the last post is called "Trivial subspace", given the neutral element is required in a vector space.

So, if:

Then the vector space is linearly independent.

But, if:

Then the vector space is linearly dependent.

Notes:

Intuitively, a set of linearly independent vectors consist of no redundant vectors. i.e. If we remove any of the vectors from the set, we will lose something. Like my hope of finishing this book in under a year... Feels bad man :(

Discussion:

Honestly, the note from the author is quite confusing, is it really intuitive considering it's the requirement of a set which leads to a group, which leads to a vector space that a set needs to have distinct elements? So why add the note?

Example

This is where I spent an hour just on an example. I'll add a question in the end for you to see if, again..., I'm an idiot or math is mathing right now.

To obtain the information on the route from Nairobi to Kigali, we need either:

A. 506 km northwest and 374 km southwest B. 751 km west

Both A and B are linearly independent but if we use both A and B then it becomes linearly dependent, where B is linearly dependent from A given it's a linear combination.

So, from reading this, it's safe to assume that one of the condition for being linearly independent is:

If any change on a single vector in the vector space x1, x2, ..., xn will affect more than one element, then the vector space is linearly dependent.

What are the other properties/conditions?

- K vectors can only be either linearly dependent or linearly independent.

- If at least one of the vector x1, x2, ..., xk is 0, then they're linearly dependent.

- If at least one of the vector x1, x2, ..., xk is a duplicate of another vector, then they're linearly dependent. (Look at the

Discussionabove, this is the same thing) - The vectors

Are: a. Linearly dependent if (at least) one is a multiple of another vector. b. Linearly dependent if (at least) one is a linear combination of the other.

Tips

This is actually the fifth point but it feels more like a tip more than the properties

Firstly, transform the vectors into matrices

From a format like this:

Into something like this

Then, ignore the x vector like in an augmented matrix and we have this:

What kind of tip is this Terra?

Good question, honestly, I'm not sure if this is a tip or another explanation.

Notice that this is eerily familiar with the topic regarding particular and general solutions, I hope you enjoyed that part since after transforming the vectors into a matrix, we're going to transform it again into a Reduced-Row Echelon Form (RREF).

Only after in the form of RREF these are the tips I've summarized.

- If there exist a column that's empty on the main diagonal, then it's linearly dependent

- If there exist a column that's empty on the main diagonal but on the have non-zero values on the other rows, then it's linearly dependent

Meaning:

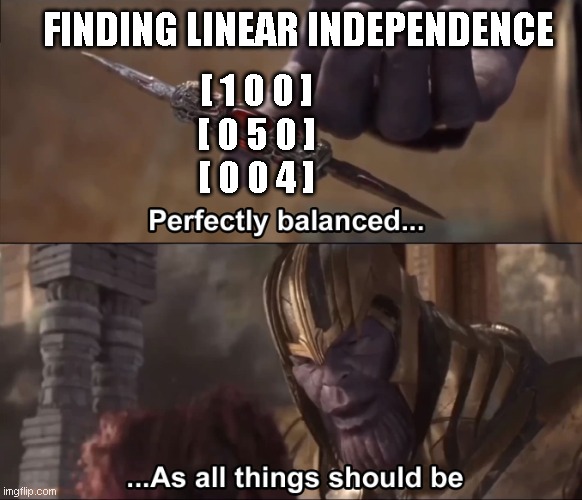

I'll give you a few matrices and I'll tell you why each of them are considered linearly dependent or independent.

Matrix 1:

Obviously this is linearly independent. Each non-zero element is on the main diagonal making them unique to each row.

Matrix 2:

Okay, so this is a linearly dependent vector, but why? is it because of the 2nd column or the 3rd column? Found your answer yet? it's the second column, given that the second column is 1/2 of the first column, this means it's a multiplication making it redundant.

P.S. I made the question column in numbers because people usually focus on numbers better, making them not see the answer text below if they really focus on answering the question. That's why only this part I made it in numbers :D Hope it worked for you

Matrix 3:

What about this? Well, it's linearly independent. Because though the third column contains non-zero value on the second row, there still isn't any linear combination that can make the third column.

Matrix 4:

This is a tic-tac-toe and the one playing circle won.

My Question

To the person reading this:

Hi, hope you're doing well :D

Let's say I have two vectors

Using the closure property:

This means that the addition between the vector x and y from the vector space v is also inside of the vector space.

With linear dependence being:

If I have the equation:

If both lambda = 1

Doesn't that mean it fulfills the linear dependence equation because of the closure property?

Then doesn't that mean all vector space are linear dependent?? I'm absolutely confused.

I have found that it's said to determine if it's linearly dependent or not, I need to solve this instead:

I think I'm confused because the example is regarding the redundancy and not much strengthening the concept of vector space.

If you can answer this, please do, I really need the explanation further :( Thank you!

Acknowledgement

I can't overstate this: I'm truly grateful for this book being open-sourced for everyone. Many people will be able to learn and understand machine learning on a fundamental level. Whether changing careers, demystifying AI, or just learning in general, this book offers immense value even for fledgling composer such as myself. So, Marc Peter Deisenroth, A. Aldo Faisal, and Cheng Soon Ong, thank you for this book.

Source: Deisenroth, M. P., Faisal, A. A., & Ong, C. S. (2020). Mathematics for Machine Learning. Cambridge: Cambridge University Press. https://mml-book.com